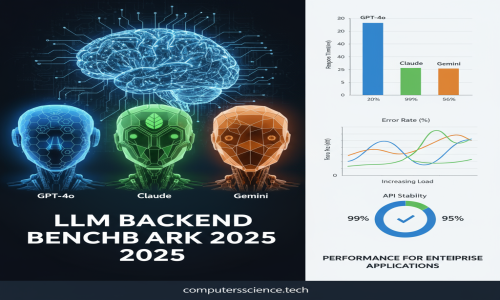

In 2025, large language models (LLMs) such as GPT-4o, Claude, and Gemini have become powerful tools for backend engineers. Whether you're building AI-driven APIs, automating documentation, processing large codebases, or handling natural language queries, the model you choose directly affects latency, cost, and output quality.

In this post, we compare the top 3 LLMs for backend developers based on:

- ⚡ Speed & response time
- 💾 Context handling
- 💰 Cost efficiency
- 🧠 Code and reasoning quality
- 🔁 Throughput for batch jobs
- 🖼️ Multimodal capabilities (images, video, etc.)

✅ Key Benchmark Criteria

| Benchmark | Why It Matters for Backend Systems |
|---|---|
| Latency / Response Time | Impacts UX and synchronous API speed |
| Throughput (Tokens/sec) | Crucial for batch processing & high-load systems |
| Context Window | Determines how large an input the model can handle |
| Token Cost (Input & Output) | Affects scalability and operational budget |
| Output Quality | Impacts accuracy for code, summaries, queries |
| Modality Support | Needed for image/video understanding tasks |
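To make the token-cost row concrete, here is a minimal sketch of a per-request and monthly cost estimate. The `Pricing` class, the function names, and above all the prices are assumptions for illustration; they are placeholders, not real vendor rates, so substitute the numbers from each provider's current price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD price per one million tokens (hypothetical placeholder values)."""
    input_per_million: float
    output_per_million: float


def estimate_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Estimated USD cost of a single request, counting input and output tokens."""
    total = (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    )
    return total / 1_000_000


def monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    pricing: Pricing,
    days: int = 30,
) -> float:
    """Scale the per-request estimate to a rough monthly budget figure."""
    return requests_per_day * days * estimate_cost(input_tokens, output_tokens, pricing)


# Placeholder prices, NOT taken from any vendor's price list.
EXAMPLE_PRICING = Pricing(input_per_million=2.0, output_per_million=8.0)

if __name__ == "__main__":
    per_request = estimate_cost(1_000, 500, EXAMPLE_PRICING)
    per_month = monthly_cost(10_000, 1_000, 500, EXAMPLE_PRICING)
    print(f"per request: ${per_request:.4f}, per month: ${per_month:,.2f}")
```

Output tokens are usually priced higher than input tokens, which is why the two are kept as separate fields rather than a single blended rate.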

🧪 GPT‑4o vs Claude vs Gemini – 2025 Comparison

| Model | Context Handling | Response Time | Cost | Strengths |
|---|---|---|---|---|
| GPT‑4o (OpenAI) | Up to ~128K tokens, solid at mid/short inputs | Fast for general tasks, may slow on complex inputs | Higher than average for premium usage | Balanced for code, reasoning, and multimodal inputs |
| Claude (Anthropic) | Handles very long contexts (~200K tokens) extremely well | Slightly slower in deep reasoning but very accurate | Premium pricing for high-quality outputs | Excellent at code quality, logic-heavy tasks |
| Gemini (Google) | Extremely large context (in Pro & Flash versions) | Gemini Flash is very fast; good for bulk tasks | Competitive pricing; cost-effective at scale | Great for multimodal inputs and document processing |
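The context figures in the table can be turned into a quick pre-flight check. The sketch below is an illustration under stated assumptions: the limits are the approximate values from the table, Gemini is left out because the table gives no specific number, and the four-characters-per-token rule is only a rough heuristic for English text. For real measurements, use each vendor's own tokenizer or token-counting endpoint.

```python
# Approximate context limits, copied from the comparison table.
# Gemini is omitted because the table states no specific figure.
CONTEXT_LIMITS = {
    "GPT-4o": 128_000,
    "Claude": 200_000,
}


def rough_token_count(text: str) -> int:
    """Very rough estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def models_that_fit(text: str, reserved_output_tokens: int = 4_000) -> list[str]:
    """Return the models whose context window can hold the input plus a reply.

    reserved_output_tokens is a hypothetical budget kept free for the response.
    """
    needed = rough_token_count(text) + reserved_output_tokens
    return [name for name, limit in CONTEXT_LIMITS.items() if needed <= limit]


if __name__ == "__main__":
    document = "x" * 600_000  # roughly 150K tokens under the heuristic
    print(models_that_fit(document))
```

Reserving room for the output matters because the context window generally covers the prompt and the generated reply together.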

🛠️ Use Case Breakdown

| Use Case | Best Model | Why |
|---|---|---|
| Fast, real-time API suggestions | GPT‑4o, Gemini Flash | Lower latency, optimal for small inputs |
| Long document or code analysis | Claude, Gemini | Excellent context retention |
| Code generation or debugging | Claude, GPT‑4o | Higher accuracy and structured output |
| Processing images or multimodal input | Gemini, GPT‑4o (Vision) | Native support for images, video |
| Bulk processing, high-volume tasks | Gemini Flash | High throughput, lower cost |
| Cost-sensitive operations | Gemini, selective Claude use | Best trade-off between cost and performance |

🧑‍💻 How to Benchmark for Your Project

Here's a step-by-step approach to benchmark models in your backend:

- Define input size: how many tokens do your inputs typically use?
- Measure latency: time to first token and full response.
- Check throughput: measure processing speed for batches.
- Review output quality: look at accuracy, hallucinations, and completeness.
- Calculate total cost: include both input and output token cost.
- Simulate edge cases: huge inputs, bad data, broken prompts.
- Monitor in production: analyze logs, latency trends, and cost over time.
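The latency and throughput steps above can be sketched with a small, provider-agnostic harness. Everything here is an assumption for illustration: `measure` expects any callable that takes a prompt and yields response chunks, and `fake_stream` is a stand-in that only sleeps. In a real benchmark you would wrap your provider's streaming client in a function with the same shape, and count tokens rather than chunks if the client exposes them.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class RunStats:
    time_to_first_token: float  # seconds until the first chunk arrived
    total_time: float           # seconds until the stream finished
    chunks: int                 # streamed chunks, used as a proxy for tokens

    @property
    def chunks_per_second(self) -> float:
        return self.chunks / self.total_time if self.total_time > 0 else 0.0


def measure(stream_fn: Callable[[str], Iterable[str]], prompt: str) -> RunStats:
    """Time one streamed response: first chunk, full response, chunk count."""
    start = time.perf_counter()
    first_chunk_at = None
    count = 0
    for _chunk in stream_fn(prompt):
        if first_chunk_at is None:
            first_chunk_at = time.perf_counter() - start
        count += 1
    total = time.perf_counter() - start
    # If nothing was streamed, report the total time as time to first token.
    ttft = first_chunk_at if first_chunk_at is not None else total
    return RunStats(ttft, total, count)


def median_ttft(stream_fn: Callable[[str], Iterable[str]], prompts: list[str]) -> float:
    """Median time to first token over several prompts (less noisy than a mean)."""
    return statistics.median(measure(stream_fn, p).time_to_first_token for p in prompts)


def fake_stream(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a real streaming client; sleeps to mimic latency."""
    time.sleep(0.05)  # simulated queueing and prefill delay
    for word in ("echo: " + prompt).split():
        time.sleep(0.01)  # simulated per-chunk generation time
        yield word


if __name__ == "__main__":
    stats = measure(fake_stream, "hello world")
    print(stats, f"{stats.chunks_per_second:.1f} chunks/s")
```

Run each model against the same prompt set, drawn from your real traffic where possible, and compare medians or percentiles rather than single runs, since network and load conditions vary between calls.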

⚖️ Summary Recommendations

| Scenario | Best LLM |
|---|---|
| Need speed + low latency | Gemini Flash, GPT‑4o |
| Processing massive documents | Claude, Gemini |
| Code-first applications | Claude, GPT‑4o |
| Working with images/media | Gemini, GPT‑4o Vision |
| Cost-conscious scaling | Gemini, selectively Claude or GPT-4-turbo |

💡 Final Thoughts

Choosing the right LLM isn’t about who’s the smartest — it’s about what fits your backend use case.

- Use Claude when quality and reasoning matter.
- Use Gemini when you want speed and cost-efficiency for large-scale tasks.
- Use GPT‑4o when you need a reliable all-rounder with great support and tooling.

Most teams will benefit from using multiple LLMs depending on the context:

- Quick responses → Gemini Flash
- Complex logic → Claude
- Multimodal tasks → GPT-4o Vision
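One way to act on this split is a small routing layer in front of your model clients. The sketch below is a minimal illustration, not a production design: the `Task` enum, `classify`, and `pick_model` are hypothetical names, the strings are display labels from this post rather than real API model identifiers, and the classification rule is deliberately naive.

```python
from enum import Enum


class Task(Enum):
    QUICK = "quick"
    COMPLEX_LOGIC = "complex_logic"
    MULTIMODAL = "multimodal"


# Labels follow the recommendations in this post; they are not API model IDs.
ROUTES = {
    Task.QUICK: "Gemini Flash",
    Task.COMPLEX_LOGIC: "Claude",
    Task.MULTIMODAL: "GPT-4o",
}


def classify(has_image: bool, needs_deep_reasoning: bool) -> Task:
    """Naive rule-based classifier (an assumption; real systems need richer signals)."""
    if has_image:
        return Task.MULTIMODAL
    if needs_deep_reasoning:
        return Task.COMPLEX_LOGIC
    return Task.QUICK


def pick_model(has_image: bool = False, needs_deep_reasoning: bool = False) -> str:
    """Return the label of the model this post recommends for the request type."""
    return ROUTES[classify(has_image, needs_deep_reasoning)]


if __name__ == "__main__":
    print(pick_model())                           # quick response
    print(pick_model(needs_deep_reasoning=True))  # complex logic
    print(pick_model(has_image=True))             # multimodal
```

Keeping the routing table in one place makes it easy to change a recommendation after you rerun your own benchmarks.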