📚 Book Recommendation System Using OpenAI Embeddings And Nomic Atlas Visualization

We’ll build a semantic book recommendation system powered by OpenAI text embeddings, and visualize the results using Nomic Atlas — a powerful tool for interactive visualization of high-dimensional data.

We'll walk through:

- Preparing the dataset (📁 from Kaggle)
- Generating embeddings with the OpenAI API
- Performing semantic search using cosine similarity
- Visualizing book relationships using Nomic Atlas

📥 Step 1: Download the Dataset

We’ll use the Goodreads Books dataset available on Kaggle:

👉 Download from Kaggle

Once downloaded, load it into a pandas DataFrame:
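Here's a minimal sketch. It assumes the file is saved as `books.csv` with `title` and `authors` columns, as in the Kaggle Goodreads export, and that multiple authors are joined with `/`. The combined `text` column and its "Title by Author" format are illustrative choices; the dataset does not provide them.

```python
import pandas as pd


def load_books(source) -> pd.DataFrame:
    """Load the Goodreads CSV and build one text string per book to embed."""
    # Skip malformed rows (extra commas) instead of failing; needs pandas >= 1.3.
    df = pd.read_csv(source, on_bad_lines="skip")
    # Some headers may carry stray spaces, so normalize them.
    df.columns = df.columns.str.strip()
    df = df[["title", "authors"]].dropna(subset=["title"]).reset_index(drop=True)
    # Assumption: multiple authors are joined with "/" in this dataset.
    authors = df["authors"].fillna("Unknown").str.replace("/", ", ", regex=False)
    # Illustrative text format: "Title by Author(s)".
    df["text"] = df["title"].astype(str) + " by " + authors
    return df
```

Usage: `books = load_books("books.csv")`.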

🧠 Step 2: Estimate Embedding Token Cost

Before generating embeddings, it’s good to estimate token usage and cost:

The estimate assumes OpenAI's `text-embedding-ada-002` model, priced at $0.0004 per 1K tokens.
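Here's a minimal sketch of the estimate. It accepts any tokenizer function, so the arithmetic stays independent of a specific library. With `tiktoken`, the tokenizer for `text-embedding-ada-002` is `tiktoken.get_encoding("cl100k_base").encode`. The name `estimate_embedding_cost` is hypothetical.

```python
from typing import Callable, Iterable, List

# USD per 1K tokens for text-embedding-ada-002, the figure quoted above.
PRICE_PER_1K_TOKENS = 0.0004


def estimate_embedding_cost(
    texts: Iterable[str],
    encode: Callable[[str], List[int]],
    price_per_1k: float = PRICE_PER_1K_TOKENS,
) -> tuple[int, float]:
    """Return (total_tokens, estimated_cost_usd) for embedding every text once."""
    total_tokens = sum(len(encode(text)) for text in texts)
    return total_tokens, total_tokens / 1000 * price_per_1k
```

For example, `tokens, cost = estimate_embedding_cost(books["text"], enc.encode)` with `enc = tiktoken.get_encoding("cl100k_base")`. At $0.0004 per 1K tokens, one million tokens comes to $0.40.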

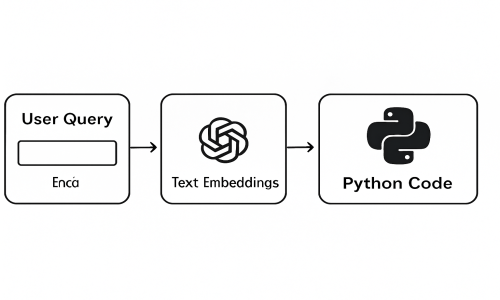

🤖 Step 3: Generate Embeddings Using OpenAI

Set up OpenAI's new SDK interface:
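Here's one way to write the helper with the v1 SDK (`openai>=1.0`). In that SDK, `client = OpenAI()` reads `OPENAI_API_KEY` from the environment. Passing the client in as a parameter is an assumption of this sketch, so the function can be exercised without network access.

```python
def get_embedding(text: str, client, model: str = "text-embedding-ada-002") -> list[float]:
    """Embed one string using an OpenAI v1-style client such as openai.OpenAI()."""
    # Replace newlines with spaces, a preprocessing step used in OpenAI's cookbook examples.
    text = text.replace("\n", " ")
    response = client.embeddings.create(input=[text], model=model)
    # The response holds one embedding per input string; we sent exactly one.
    return response.data[0].embedding
```

Usage: `from openai import OpenAI`, then `vector = get_embedding("The Hobbit by J.R.R. Tolkien", OpenAI())`.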

Apply it to your DataFrame and save the results:
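Here's a sketch of the apply-and-save step. The function `embed_and_save` and the output path `books_with_embeddings.csv` are hypothetical. `embed` is any function that maps a string to a vector, for example `lambda t: get_embedding(t, client)`.

```python
from typing import Callable, List

import pandas as pd


def embed_and_save(
    df: pd.DataFrame,
    embed: Callable[[str], List[float]],
    out_path: str = "books_with_embeddings.csv",
) -> pd.DataFrame:
    """Add an 'embedding' column by embedding df['text'] row by row, then save to CSV."""
    df = df.copy()  # leave the caller's DataFrame untouched
    df["embedding"] = df["text"].apply(embed)
    # to_csv stores each list as its string form, e.g. "[0.1, 0.2]",
    # so it must be parsed back into numbers when loaded later.
    df.to_csv(out_path, index=False)
    return df
```

Calling the API once per row is simple but slow for thousands of books. The embeddings endpoint also accepts a list of strings as `input`, so batching is a natural next step.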

🔁 Step 4: Load Embeddings for Recommendation
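Because `to_csv` writes each embedding as a string like `"[0.1, 0.2]"`, the vectors have to be parsed back before doing any math. Here's a minimal sketch, assuming the CSV from the previous step with an `embedding` column (`load_embeddings` is a hypothetical name).

```python
import json

import numpy as np
import pandas as pd


def load_embeddings(path) -> tuple[pd.DataFrame, np.ndarray]:
    """Read the saved CSV and return the DataFrame plus an (n_books, dim) matrix."""
    df = pd.read_csv(path)
    # Each cell is a string such as "[0.1, 0.2]"; json.loads turns it back into a list.
    vectors = df["embedding"].apply(json.loads).tolist()
    matrix = np.array(vectors, dtype=np.float32)
    return df, matrix
```

Usage: `books, matrix = load_embeddings("books_with_embeddings.csv")`. Row `i` of `matrix` belongs to row `i` of `books`.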

🎯 Step 5: Recommend Books Using Cosine Similarity

Define cosine similarity and a recommendation function:
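Here's a straightforward sketch of both pieces. It assumes `embeddings` is the `(n_books, dim)` matrix from Step 4 and `titles` lists the book titles in the same row order. The query vector would come from embedding the user's search text with the same model.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """cos(a, b) = a . b / (|a| * |b|)"""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def recommend_books(query_embedding, embeddings: np.ndarray, titles, top_n: int = 5):
    """Return the top_n (title, score) pairs most similar to the query vector."""
    titles = list(titles)
    query = np.asarray(query_embedding, dtype=np.float64)
    scores = [cosine_similarity(query, row) for row in embeddings]
    # argsort is ascending, so reverse it to rank the highest similarity first.
    best = np.argsort(scores)[::-1][:top_n]
    return [(titles[i], scores[i]) for i in best]
```

OpenAI states that its embeddings are normalized to length 1, so a plain dot product would give the same ranking. The explicit formula keeps the function correct for arbitrary vectors.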

🔍 Example:
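As one possible example, here's a sketch of "more like this" recommendations for a book that is already in the dataset. `similar_to_title` is a hypothetical helper. It normalizes the matrix once, so a single matrix-vector product scores every book at the same time.

```python
import numpy as np


def similar_to_title(title: str, titles, embeddings: np.ndarray, top_n: int = 3):
    """Hypothetical helper: recommend books whose embeddings are closest to a known title."""
    titles = list(titles)
    idx = titles.index(title)  # raises ValueError if the title is not present
    # Scale every row to unit length; then a dot product equals cosine similarity.
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    scores = unit @ unit[idx]
    scores[idx] = -np.inf  # exclude the query book itself
    best = np.argsort(scores)[::-1][: min(top_n, len(titles) - 1)]
    return [(titles[i], float(scores[i])) for i in best]
```

Usage: `similar_to_title(books["title"][0], books["title"], matrix)` returns the three nearest titles with their cosine scores.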

🌍 Step 6: Visualize Embeddings with Nomic Atlas

Install the Nomic Python client with `pip install nomic`:

Prepare the data and embeddings for visualization:
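Here's a minimal sketch, assuming Atlas is given a 2-D `float32` NumPy array plus one metadata dict per row, each carrying a unique string `id`. `prepare_for_atlas`, the field list, and the `id` scheme are illustrative choices.

```python
import numpy as np
import pandas as pd


def prepare_for_atlas(df: pd.DataFrame, matrix: np.ndarray, fields=("title", "authors")):
    """Return (embeddings, metadata) with one metadata record per embedding row."""
    if len(df) != matrix.shape[0]:
        raise ValueError("DataFrame rows and embedding rows must line up")
    embeddings = np.ascontiguousarray(matrix, dtype=np.float32)
    # Convert the chosen columns to plain strings so every record is uniform.
    metadata = df[list(fields)].fillna("").astype(str).to_dict(orient="records")
    # Assumed convention: a unique string id per row, matching the row order.
    for i, record in enumerate(metadata):
        record["id"] = str(i)
    return embeddings, metadata
```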

Now send it to Atlas:

After a few moments, Atlas will give you a link to explore your interactive book map — similar books will cluster together!

📌 What You’ve Built

✔ A semantic book search that understands meaning, not just keywords

✔ A recommender system built on OpenAI embeddings

✔ An interactive map of books in Nomic Atlas

✔ An end-to-end pipeline: dataset → embeddings → visualization

💡 Final Thoughts

Semantic search opens up new ways to explore data. With just a few tools:

- OpenAI for embeddings
- NumPy for math
- Pandas for data handling
- Nomic Atlas for beautiful visualization

…you can build real-world AI systems in just a few lines of code.

🔗 Resources