In 2025, Large Language Models (LLMs) like GPT‑4, Claude, and Gemini are transforming how backend developers write, test, deploy, and document code. With the rise of smart IDEs like Cursor, integrating AI into your development and CI/CD workflows has never been easier.

From writing unit tests to managing CI pipelines and infrastructure-as-code (IaC), LLMs are reshaping what backend productivity looks like.

In this blog, we’ll explore:

- 🧪 How AI can write & review backend unit tests
- 📑 Auto-generating Swagger/OpenAPI documentation
- ⚙️ Using Cursor and GitHub Copilot for CI/CD and IaC

1️⃣ Writing and Reviewing Unit Tests with AI

🔍 What's Possible?

-

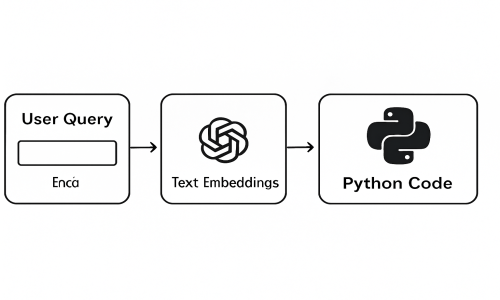

LLMs can auto-generate unit tests by analyzing your function signatures, expected behaviors, and comments.

-

In Cursor, you can select a function → right-click → “Ask AI to generate tests,” and it instantly creates PyTest, JUnit, or Jest cases.

-

Tools like Pythagora also offer test scaffolding from runtime behavior or code context.

-

Academic research (e.g., ChatUniTest) enhances this by combining generation → validation → fix cycles.
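To make this concrete, here is a sketch of the kind of test file an assistant might draft for a small function. `apply_discount` is a hypothetical function invented for this example. The tests use plain `assert` statements, which pytest collects from any `test_*` function, so the file also runs standalone. In a real suite you would more likely use `pytest.raises` and `pytest.mark.parametrize`.

```python
# Hypothetical function under test, invented for this example.
def apply_discount(price: float, percent: float) -> float:
    """Return price reduced by percent, rounded to cents."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Tests in the style an LLM typically drafts: a happy path plus edge cases.
def test_regular_discount():
    assert apply_discount(200.0, 25) == 150.0


def test_zero_percent_returns_original_price():
    assert apply_discount(99.99, 0) == 99.99


def test_full_discount_is_free():
    assert apply_discount(50.0, 100) == 0.0


def test_rounds_to_cents():
    assert apply_discount(19.99, 15) == 16.99


def test_rejects_out_of_range_percent():
    for bad in (-5, 101):
        try:
            apply_discount(100.0, bad)
        except ValueError:
            continue  # expected
        raise AssertionError(f"expected ValueError for percent={bad}")


if __name__ == "__main__":
    # pytest would discover these automatically; this loop lets the file run standalone.
    for name, fn in list(globals().items()):
        if name.startswith("test_") and callable(fn):
            fn()
    print("all tests passed")
```

These are exactly the tests a reviewer should still read. A generated test only proves that the code does what the test says, not that the test says the right thing.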

✅ Benefits

- Less boilerplate: draft tests inside Cursor from a single prompt
- Better test coverage and edge-case handling
- Lower friction for Test-Driven Development (TDD)
- Extra tests that can catch silent bugs

⚠️ Challenges

- Generated tests may encode the code's current behavior, bugs included, instead of the intended behavior
- Human validation is still crucial
- Generating tests with an LLM on every commit can noticeably increase pipeline time
- AI can misinterpret side effects or stateful logic
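One way to keep LLM test generation from slowing down every commit is to regenerate only when a function's source actually changes. The sketch below caches generated tests under a SHA-256 hash of the source text. `generate_tests` stands in for whatever model call you use and is hypothetical, as is the JSON cache file layout.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Callable


def cached_generate(
    source: str,
    generate_tests: Callable[[str], str],
    cache_file: Path,
) -> str:
    """Return generated tests for `source`, calling the model only on a cache miss."""
    # Key on the exact source text: any edit to the function invalidates the entry.
    key = hashlib.sha256(source.encode("utf-8")).hexdigest()
    cache = json.loads(cache_file.read_text()) if cache_file.exists() else {}
    if key in cache:
        return cache[key]  # unchanged source: skip the slow, billable model call
    tests = generate_tests(source)
    cache[key] = tests
    cache_file.write_text(json.dumps(cache, indent=2))
    return tests


if __name__ == "__main__":
    calls: list[str] = []

    def fake_generate_tests(src: str) -> str:
        # Hypothetical stand-in for a real LLM API call.
        calls.append(src)
        return "def test_add():\n    assert add(2, 3) == 5\n"

    with tempfile.TemporaryDirectory() as tmp:
        cache_path = Path(tmp) / "llm_test_cache.json"
        fn_source = "def add(a, b):\n    return a + b\n"
        cached_generate(fn_source, fake_generate_tests, cache_path)
        cached_generate(fn_source, fake_generate_tests, cache_path)  # cache hit
        print(f"model calls: {len(calls)}")  # model calls: 1
```

In CI, the cache file only pays off if it persists between runs, for example through your CI system's cache feature.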

2️⃣ Auto-Generating Swagger/OpenAPI Docs

🔍 What's Possible?

- LLMs can read endpoint code and draft full OpenAPI (formerly Swagger) specs.
- In Cursor, select your API controller file and ask the AI to "Generate OpenAPI docs"; it writes the YAML spec inline.
- Tools like APItoolkit and Workik AI support generation from live traffic or static analysis.
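At its core, static generation means reading route metadata and emitting an OpenAPI `paths` object. The sketch below does this with the standard library only. Several pieces are hypothetical stand-ins for what a framework like Flask or Django would expose: the `ROUTES` table, the handler functions, and the Python-to-OpenAPI type mapping. An LLM-based tool would go further and infer descriptions and response schemas from the code. The output is JSON, which OpenAPI accepts alongside YAML.

```python
import inspect
import json
from typing import Callable, get_type_hints


# Hypothetical handlers and route table: (HTTP method, path template, handler).
def get_user(user_id: int) -> dict:
    """Fetch a single user by ID."""
    return {"id": user_id}


def search_users(query: str, limit: int = 20) -> list:
    """Search users by name."""
    return []


ROUTES: list[tuple[str, str, Callable]] = [
    ("get", "/users/{user_id}", get_user),
    ("get", "/users", search_users),
]

# Minimal mapping from Python annotations to OpenAPI schema types (an assumption).
PY_TO_OPENAPI = {int: "integer", str: "string", float: "number", bool: "boolean"}


def build_spec(routes, title: str = "Example API", version: str = "0.1.0") -> dict:
    paths: dict = {}
    for method, path, handler in routes:
        hints = get_type_hints(handler)
        params = []
        for name, param in inspect.signature(handler).parameters.items():
            in_path = "{" + name + "}" in path
            params.append({
                "name": name,
                "in": "path" if in_path else "query",
                # Path params are always required; query params only when they lack a default.
                "required": in_path or param.default is inspect.Parameter.empty,
                "schema": {"type": PY_TO_OPENAPI.get(hints.get(name), "string")},
            })
        paths.setdefault(path, {})[method] = {
            "summary": inspect.getdoc(handler) or "",
            "parameters": params,
            "responses": {"200": {"description": "Successful response"}},
        }
    return {"openapi": "3.0.3", "info": {"title": title, "version": version}, "paths": paths}


if __name__ == "__main__":
    print(json.dumps(build_spec(ROUTES), indent=2))
```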

✅ Benefits

- Keep docs in sync with the code as it changes
- Help frontend, QA, and external developers understand APIs faster
- Generate mock servers and client SDKs from the same source
- Use Cursor to turn OpenAPI specs into typed API clients
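Because the spec is machine-readable, a mock can be derived from it directly. The sketch below is a deliberately minimal responder. It finds the operation for a method and path and returns the `example` attached to that operation's `200` response; OpenAPI 3 allows an `example` under a response's media type. The inline `SPEC` and the simple `{param}` path matching are illustrative assumptions. A production mock server handles far more of the specification.

```python
import re
from typing import Any, Optional

# Illustrative OpenAPI 3 fragment with an example response body.
SPEC: dict[str, Any] = {
    "openapi": "3.0.3",
    "info": {"title": "Example API", "version": "0.1.0"},
    "paths": {
        "/users/{user_id}": {
            "get": {
                "responses": {
                    "200": {
                        "description": "A user",
                        "content": {
                            "application/json": {"example": {"id": 1, "name": "Ada"}}
                        },
                    }
                }
            }
        }
    },
}


def path_to_regex(template: str) -> re.Pattern:
    """Turn '/users/{user_id}' into a regex that matches '/users/42'."""
    parts = re.split(r"(\{[^/}]+\})", template)
    pattern = "".join("[^/]+" if p.startswith("{") else re.escape(p) for p in parts)
    return re.compile(f"^{pattern}$")


def mock_response(spec: dict, method: str, path: str) -> Optional[Any]:
    """Return the example body of the matching operation's 200 response, if any."""
    for template, operations in spec["paths"].items():
        if path_to_regex(template).match(path):
            op = operations.get(method.lower())
            if op is None:
                return None  # path exists, but not for this method
            content = op["responses"].get("200", {}).get("content", {})
            return content.get("application/json", {}).get("example")
    return None


if __name__ == "__main__":
    print(mock_response(SPEC, "GET", "/users/42"))
```

Wiring `mock_response` into an HTTP handler gives frontend and QA a stub backend that stays in step with the spec.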

⚠️ Challenges

- Auto-generated docs may miss edge-case behaviors
- Risk of exposing private/internal endpoints
- Custom logic (e.g., authentication flows) often needs manual intervention
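To reduce the risk of publishing internal endpoints, you can filter the generated spec before it leaves the build. The sketch below assumes a team convention of marking internal operations with an `x-internal: true` vendor extension. OpenAPI permits custom `x-` fields, and the exact name is an assumption here. The sketch also flags operations that end up with no `security` requirement so a human can check them. In CI you might fail the job whenever the warning list is non-empty.

```python
import copy
from typing import Any

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def prepare_public_spec(spec: dict[str, Any]) -> tuple[dict[str, Any], list[str]]:
    """Drop operations marked `x-internal: true` and list operations lacking security.

    Returns (public_spec, warnings). The input spec is not modified.
    """
    public = copy.deepcopy(spec)
    warnings: list[str] = []
    global_security = public.get("security")
    for path in list(public.get("paths", {})):
        item = public["paths"][path]
        for method in [m for m in item if m in HTTP_METHODS]:
            op = item[method]
            if op.get("x-internal") is True:
                del item[method]
                continue
            # Operation-level `security` overrides the global one; an empty list means no auth.
            if not op.get("security", global_security):
                warnings.append(f"{method.upper()} {path} has no security requirement")
        if not any(m in HTTP_METHODS for m in item):
            del public["paths"][path]  # nothing public left under this path
    return public, warnings
```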

3️⃣ GitHub Copilot + Cursor + CI/CD + Infrastructure-as-Code (IaC)

🔍 What's Possible?

- In Cursor, you can scaffold:
  - GitHub Actions workflows
  - Dockerfiles
  - Terraform configurations and Pulumi programs
  - Bash deploy scripts
- Copilot can help fill in CI/CD configs, test runners, and security scan steps.
- You can also write IaC with inline AI suggestions in your IDE and preview its effect before applying it (e.g., with `terraform plan`).
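Scaffolded CI configs are easy to sanity-check before they are committed. The sketch below runs a few structural checks on a GitHub Actions workflow that has already been parsed into a dict; parsing with PyYAML's `yaml.safe_load` is assumed and not shown. The checks are:

- a trigger is present;
- every job has `runs-on` (unless it calls a reusable workflow via `uses`);
- every step has exactly one of `uses`/`run`;
- third-party actions are pinned with `@`.

PyYAML follows YAML 1.1, so a top-level `on:` key loads as the boolean `True`, which the check accepts.

```python
from typing import Any


def check_workflow(wf: dict[Any, Any]) -> list[str]:
    """Return a list of problems found in a parsed GitHub Actions workflow."""
    problems: list[str] = []
    # PyYAML (YAML 1.1) parses a bare `on:` key as the boolean True.
    if "on" not in wf and True not in wf:
        problems.append("workflow has no trigger (`on:`)")
    jobs = wf.get("jobs") or {}
    if not jobs:
        problems.append("workflow defines no jobs")
    for job_id, job in jobs.items():
        if "uses" in job:
            continue  # reusable-workflow call: no runs-on or steps of its own
        if "runs-on" not in job:
            problems.append(f"job '{job_id}' is missing runs-on")
        for i, step in enumerate(job.get("steps", [])):
            has_uses, has_run = "uses" in step, "run" in step
            if has_uses == has_run:
                problems.append(f"job '{job_id}' step {i} needs exactly one of uses/run")
            action = step.get("uses", "")
            # Local (./) and docker:// actions are not version-pinned with @.
            if has_uses and not action.startswith(("./", "docker://")) and "@" not in action:
                problems.append(f"job '{job_id}' step {i} action '{action}' is not pinned")
    return problems
```

A check like this makes a cheap pre-commit hook. It catches structural slips, not logic errors such as a test step that silently runs nothing.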

✅ Benefits

- Instant infra templates via Cursor's AI assistant
- Faster onboarding for DevOps and backend engineers
- Fewer common errors (syntax mistakes, obvious resource misconfigurations)
- Encourages versioned, repeatable infrastructure

⚠️ Challenges

- Generated IaC must be audited manually
- Open security groups, overprovisioned instances, or exposed secrets can slip through
- Don’t deploy AI-generated infra blindly; always test in a sandbox first
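Part of that audit can be automated. Terraform can export a plan as JSON (`terraform plan -out=tfplan`, then `terraform show -json tfplan`). The sketch below walks the plan's `resource_changes` and flags two things:

- AWS security groups (or `aws_security_group_rule` ingress rules) that allow `0.0.0.0/0`;
- any string value that looks like an AWS access key ID.

These rules are narrow examples chosen for illustration. They are not a substitute for a dedicated scanner or a human review. In CI you could pipe the plan JSON into a script that calls `audit_plan` and exits non-zero on findings.

```python
import re
from typing import Any, Iterator

# AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary) plus 16 uppercase alphanumerics.
ACCESS_KEY_RE = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")
OPEN_CIDR = "0.0.0.0/0"


def iter_strings(value: Any) -> Iterator[str]:
    """Yield every string nested anywhere inside dicts and lists."""
    if isinstance(value, str):
        yield value
    elif isinstance(value, dict):
        for v in value.values():
            yield from iter_strings(v)
    elif isinstance(value, list):
        for v in value:
            yield from iter_strings(v)


def audit_plan(plan: dict[str, Any]) -> list[str]:
    """Flag risky changes in `terraform show -json` output (a narrow sketch)."""
    findings: list[str] = []
    for rc in plan.get("resource_changes", []):
        address, rtype = rc.get("address", "?"), rc.get("type", "")
        # `after` is null for deletions, so fall back to an empty dict.
        after = (rc.get("change") or {}).get("after") or {}
        if rtype == "aws_security_group":
            rules = after.get("ingress") or []
        elif rtype == "aws_security_group_rule" and after.get("type") == "ingress":
            rules = [after]
        else:
            rules = []
        for rule in rules:
            if OPEN_CIDR in (rule.get("cidr_blocks") or []):
                findings.append(
                    f"{address}: ingress open to {OPEN_CIDR} on ports "
                    f"{rule.get('from_port')}-{rule.get('to_port')}"
                )
        if any(ACCESS_KEY_RE.search(s) for s in iter_strings(after)):
            findings.append(f"{address}: value looks like an AWS access key ID")
    return findings
```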

🛠 Best Practices for Using LLMs with Cursor & CI/CD

| Best Practice | What It Means in Cursor |
|---|---|
| 🧠 Prompt clearly | When asking Cursor AI to generate code/tests, be specific about edge cases and expected behavior |
| 🔄 Use AI loops | Let Cursor generate → run tests → fix broken output automatically |
| 🔒 Review IaC manually | Don't deploy Copilot/Cursor-generated infra to production without approval |
| 💸 Monitor cost | If you're using model APIs (like OpenAI), avoid repeated generation inside pipelines |
| ✅ Validate everything | Use pre-commit hooks or CI checks to lint/test AI-generated code |
| 🚦 Human in the loop | Always have a developer review outputs before merging to main branch |
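The "AI loops" and "human in the loop" rows combine naturally. Let the model retry a bounded number of times with the failure output as feedback, then hand off to a person. The sketch below is a minimal version of that generate → validate → repair cycle. `generate` stands in for a hypothetical LLM call. `validate` simply executes the candidate in a fresh namespace, whereas a real pipeline would run pytest in an isolated subprocess or container.

```python
import traceback
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class LoopResult:
    code: Optional[str]  # accepted candidate, or None if every attempt failed
    attempts: int
    errors: list[str] = field(default_factory=list)

    @property
    def needs_human(self) -> bool:
        return self.code is None


def run_candidate(code: str) -> Optional[str]:
    """Execute candidate code (including its asserts); return an error summary or None.

    exec() of model output is unsafe outside a sandbox; this is for illustration only.
    """
    try:
        exec(compile(code, "<candidate>", "exec"), {})
    except Exception:
        return traceback.format_exc(limit=1)
    return None


def generate_validate_repair(
    generate: Callable[[Optional[str]], str],
    validate: Callable[[str], Optional[str]] = run_candidate,
    max_attempts: int = 3,
) -> LoopResult:
    feedback: Optional[str] = None
    errors: list[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = generate(feedback)  # feedback is None on the first try
        error = validate(candidate)
        if error is None:
            return LoopResult(candidate, attempt, errors)
        errors.append(error)
        feedback = error  # feed the failure back to the model
    return LoopResult(None, max_attempts, errors)  # give up: a human takes over


if __name__ == "__main__":
    # Hypothetical model: the first answer has a bug, the retry fixes it.
    answers = iter([
        "def add(a, b):\n    return a - b\nassert add(2, 3) == 5\n",
        "def add(a, b):\n    return a + b\nassert add(2, 3) == 5\n",
    ])
    result = generate_validate_repair(lambda feedback: next(answers))
    print(result.attempts, result.needs_human)  # 2 False
```

Capping `max_attempts` also bounds API cost. That addresses the "Monitor cost" row, since a loop that never converges stops instead of burning tokens.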

🧪 Real Tools You Can Try Today

| Use Case | Tool |
|---|---|
| IDE-based AI assistance | Cursor |
| Codegen for tests | Pythagora |
| API Docs from traffic | APItoolkit |
| CI/CD + IaC scaffolding | GitHub Copilot |
| Docs & SDK generator | Workik AI |

💡 Sample Cursor Use Cases

- Ask AI: “Write unit tests for this function using pytest”
- Generate: “Create a GitHub Actions pipeline to run lint & tests”
- Fix: “Why is this test failing?” → Cursor explains the failure and suggests a fix
- Document: “Generate OpenAPI spec from this Flask/Django route”
- Infra: “Generate a basic Terraform config to deploy this Node.js app on AWS”

🏁 Conclusion

LLMs are no longer just assistants; they are productivity multipliers in the backend workflow. IDEs like Cursor bring this power directly to your fingertips, letting you move from code to deployment faster and smarter.

Write better tests.

Document faster.

Automate deployment.

Focus on what truly matters: your business logic.