AI APIs like OpenAI’s GPT-4, Anthropic's Claude, or Google's Gemini are powerful tools — but using them in production environments isn’t as simple as just calling .send() from your backend.

To make these models useful in real-world applications, developers must build layers around them that are:

✅ Secure

✅ Scalable

✅ Cost-efficient

✅ Production-resilient

In this blog, we’ll explore how to build production-grade integrations with AI APIs by covering:

- 🔐 Securely integrating LLM APIs (OpenAI, Claude, etc.)
- 🚦 Building rate-limited proxy layers
- ⏱ Handling long-running tasks with queues and webhooks

Let’s dive in.

🔐 1. Secure Integration of AI APIs in Production

🔒 Why Security Matters

- AI APIs typically require private API keys
- These APIs receive user-provided input and return model-generated content, both of which may carry compliance or moderation requirements
- Overuse can lead to unexpected costs

✅ Best Practices

1.1 Environment Variables

Never hardcode your API keys. Use environment variables (.env) and secure secret managers like:

- AWS Secrets Manager
- Vault by HashiCorp
- GCP Secret Manager
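A minimal sketch of loading the key once at startup, assuming your secret manager or deploy tooling injects it as an environment variable named `OPENAI_API_KEY`. The variable names and the `loadAiConfig`/`maskKey` helpers are illustrative, not from any library. Taking `env` as a parameter keeps the function testable; in the app you would call `loadAiConfig(process.env)` at boot.

```typescript
// Hypothetical config shape for this sketch.
interface AiConfig {
  openaiApiKey: string;
  model: string;
}

type Env = Record<string, string | undefined>;

// Fail fast at startup if the secret is missing, instead of discovering it
// on the first user request.
function loadAiConfig(env: Env): AiConfig {
  const openaiApiKey = env.OPENAI_API_KEY?.trim();
  if (!openaiApiKey) {
    throw new Error("OPENAI_API_KEY is not set; refusing to start");
  }
  return {
    openaiApiKey,
    // Non-secret settings can safely fall back to a default.
    model: env.OPENAI_MODEL ?? "gpt-4",
  };
}

// Never log the key itself; a masked form is enough to tell keys apart.
function maskKey(key: string): string {
  return key.length <= 8 ? "****" : `${key.slice(0, 4)}...${key.slice(-4)}`;
}
```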

1.2 Server-Side Calls Only

Expose only your own API endpoints to the frontend, never the LLM provider's API directly. The browser calls your backend, and only the backend holds the API key and talks to the provider.
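Here is a framework-agnostic sketch of such a backend endpoint. The route, the `handleChat` helper, and the 4,000-character cap are hypothetical; the upstream request uses OpenAI's Chat Completions endpoint (`POST /v1/chat/completions` with a Bearer token). `fetchFn` is injected so Node 18+'s global `fetch` can be passed in production and a stub in tests.

```typescript
// Minimal fetch-compatible type, so the sketch doesn't depend on DOM or Node typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<any> }>;

interface ChatDeps {
  apiKey: string; // loaded server-side at startup; never sent to the browser
  model: string;
  fetchFn: FetchLike; // global fetch in Node 18+, or a stub in tests
}

interface ChatResult {
  status: number;
  body: { reply?: string; error?: string };
}

const MAX_MESSAGE_CHARS = 4000; // hypothetical input cap

// Handler body for a hypothetical POST /api/chat route. The client sends only
// { message }; the key, model, and request parameters stay on the server.
async function handleChat(input: unknown, deps: ChatDeps): Promise<ChatResult> {
  const message = (input as { message?: unknown } | null)?.message;
  if (typeof message !== "string" || message.trim() === "" || message.length > MAX_MESSAGE_CHARS) {
    return { status: 400, body: { error: `message must be 1-${MAX_MESSAGE_CHARS} characters` } };
  }

  const res = await deps.fetchFn("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${deps.apiKey}`,
    },
    body: JSON.stringify({
      model: deps.model,
      messages: [{ role: "user", content: message }],
    }),
  });

  if (!res.ok) {
    // Map provider failures to a generic error instead of passing the
    // upstream body through to the browser.
    return { status: 502, body: { error: "AI service unavailable" } };
  }
  const data = await res.json();
  return { status: 200, body: { reply: data.choices?.[0]?.message?.content ?? "" } };
}
```

In Express, for example, the route would call `handleChat(req.body, deps)` and finish with `res.status(result.status).json(result.body)`.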

1.3 Audit & Logging

Always log:

- Prompt/request metadata (not user data)
- Tokens used
- Time taken
- Any failure or moderation flags

Use structured logs with tools like:

- Datadog
- Logtail
- Winston / Pino
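A minimal sketch of one structured record per LLM call. The field names are assumptions; the token counts come from the `usage` object (`prompt_tokens`, `completion_tokens`, `total_tokens`) included in OpenAI Chat Completions responses. Only metadata is recorded, never prompt text or model output.

```typescript
// Token counts as reported in the `usage` field of an OpenAI Chat Completions response.
interface Usage {
  prompt_tokens: number;
  completion_tokens: number;
  total_tokens: number;
}

// One structured record per LLM call. Field names are this sketch's own choice.
interface LlmCallLog {
  event: "llm_call";
  requestId: string;
  userId: string; // an identifier, not user content
  model: string;
  promptTokens: number;
  completionTokens: number;
  totalTokens: number;
  durationMs: number;
  status: "ok" | "error" | "flagged"; // "flagged" = blocked by moderation
  errorCode?: string;
}

interface CallInfo {
  requestId: string;
  userId: string;
  model: string;
  usage?: Usage; // absent when the call failed before a response arrived
  startedAt: number; // epoch ms
  finishedAt: number; // epoch ms
  status: LlmCallLog["status"];
  errorCode?: string;
}

// Metadata only: no prompt text and no model output.
function buildLlmLog(info: CallInfo): LlmCallLog {
  const usage = info.usage ?? { prompt_tokens: 0, completion_tokens: 0, total_tokens: 0 };
  const record: LlmCallLog = {
    event: "llm_call",
    requestId: info.requestId,
    userId: info.userId,
    model: info.model,
    promptTokens: usage.prompt_tokens,
    completionTokens: usage.completion_tokens,
    totalTokens: usage.total_tokens,
    durationMs: info.finishedAt - info.startedAt,
    status: info.status,
  };
  if (info.errorCode) record.errorCode = info.errorCode;
  return record;
}

// One JSON object per line, which log shippers for Datadog or Logtail can parse.
// With Pino you would pass the record to logger.info(record) instead.
function toLogLine(record: LlmCallLog): string {
  return JSON.stringify(record);
}
```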

🚦 2. Building Rate-Limited Proxy Layers

🧠 Why You Need a Proxy

AI APIs are expensive and rate-limited (e.g., 3 RPM or 10k tokens/min). In production, you must:

- Prevent abuse (both accidental & malicious)
- Enforce quotas per user/org
- Monitor and throttle requests
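Even behind your own limiter, the provider can still answer with HTTP 429 when its limits are reached. A common client-side response is retrying with exponential backoff and jitter. This is a minimal sketch assuming upstream errors carry a numeric `status` field (plain `fetch` code would need to attach it); `withRetry` and its options are hypothetical names.

```typescript
// Status codes worth retrying: 429 (rate limited) and transient server errors.
const RETRYABLE_STATUSES = new Set([429, 500, 502, 503, 504]);

// Assumes upstream errors carry a numeric `status` field.
function httpStatusOf(err: unknown): number | undefined {
  const status = (err as { status?: unknown } | null)?.status;
  return typeof status === "number" ? status : undefined;
}

interface RetryOptions {
  maxAttempts: number; // total tries, including the first
  baseDelayMs: number; // backoff ceiling before the first retry
  maxDelayMs: number; // cap on any single wait
  sleep?: (ms: number) => Promise<void>; // injectable for tests
  random?: () => number; // injectable jitter source, returns [0, 1)
}

// Exponential backoff with "full jitter": before retry n (0-based), wait a
// random time in [0, min(maxDelayMs, baseDelayMs * 2^n)) so clients that were
// throttled together don't all retry at the same moment.
async function withRetry<T>(fn: () => Promise<T>, opts: RetryOptions): Promise<T> {
  const sleep = opts.sleep ?? ((ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms)));
  const random = opts.random ?? Math.random;
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      const status = httpStatusOf(err);
      const retryable = status !== undefined && RETRYABLE_STATUSES.has(status);
      // Non-retryable errors (e.g. 400, 401) and the final attempt surface immediately.
      if (!retryable || attempt + 1 >= opts.maxAttempts) throw err;
      const ceiling = Math.min(opts.maxDelayMs, opts.baseDelayMs * 2 ** attempt);
      await sleep(Math.floor(random() * ceiling));
    }
  }
}
```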

🧰 Build a Rate-Limiting Middleware

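A basic approach uses a fixed-window counter in Redis: `INCR` a per-caller key, set an `EXPIRE` on the first hit in each window, and reject the request once the count exceeds the limit.

Then apply this as middleware on your API route handlers, so every request is checked before it reaches the LLM provider.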
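A minimal sketch of the fixed-window approach, written against a small `CounterStore` interface that mirrors the two Redis commands it needs (`INCR` and `EXPIRE`). The interface, the in-memory `MemoryCounterStore` stand-in, and the key format are assumptions for illustration; in production the store would wrap a real Redis client. The result also carries quota info for response headers.

```typescript
// The two Redis commands this pattern needs; a thin wrapper around a real
// Redis client would implement it in production.
interface CounterStore {
  incr(key: string): Promise<number>;
  expire(key: string, seconds: number): Promise<unknown>;
}

// In-memory stand-in for Redis, for tests and single-process demos only.
class MemoryCounterStore implements CounterStore {
  private readonly entries = new Map<string, { value: number; expiresAt: number }>();
  private readonly now: () => number;

  constructor(now: () => number = Date.now) {
    this.now = now;
  }

  async incr(key: string): Promise<number> {
    const entry = this.entries.get(key);
    if (!entry || entry.expiresAt <= this.now()) {
      // Like INCR on a missing key: treat it as 0, increment to 1, no TTL yet.
      this.entries.set(key, { value: 1, expiresAt: Infinity });
      return 1;
    }
    entry.value += 1;
    return entry.value;
  }

  async expire(key: string, seconds: number): Promise<unknown> {
    const entry = this.entries.get(key);
    if (entry) entry.expiresAt = this.now() + seconds * 1000;
    return entry ? 1 : 0;
  }
}

interface RateLimitResult {
  allowed: boolean;
  limit: number;
  remaining: number;
  resetSeconds: number; // seconds until the current window ends
}

// Fixed window: at most `limit` requests per `windowSeconds` per caller.
async function checkRateLimit(
  store: CounterStore,
  callerId: string, // user ID, API key, JWT subject, or org ID
  limit: number,
  windowSeconds: number,
  nowMs: number = Date.now(),
): Promise<RateLimitResult> {
  const windowMs = windowSeconds * 1000;
  const windowIndex = Math.floor(nowMs / windowMs);
  const key = `ratelimit:${callerId}:${windowIndex}`;
  const count = await store.incr(key);
  if (count === 1) {
    // First hit in this window: let the key expire so old windows don't pile up.
    await store.expire(key, windowSeconds);
  }
  return {
    allowed: count <= limit,
    limit,
    remaining: Math.max(0, limit - count),
    resetSeconds: Math.ceil(((windowIndex + 1) * windowMs - nowMs) / 1000),
  };
}

// Conventional (not standardized) quota headers for the response.
function rateLimitHeaders(r: RateLimitResult): Record<string, string> {
  return {
    "X-RateLimit-Limit": String(r.limit),
    "X-RateLimit-Remaining": String(r.remaining),
    "X-RateLimit-Reset": String(r.resetSeconds),
  };
}
```

Because `INCR` and `EXPIRE` are separate calls, a crash between them can leave a key without a TTL; wrapping them in a `MULTI`/`EXEC` transaction or a Lua script avoids that. A fixed window also lets a caller send up to twice the limit around a window boundary; a sliding window or token bucket smooths that out.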

🛠 Optional Features

- Rate limit by API Key, JWT, or org ID
- Return quota info in headers
- Add token bucket strategy for burst flexibility
- Use OpenAI’s `x-ratelimit-*` response headers to monitor consumption
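For the token bucket strategy, here is an in-memory, single-process sketch (the class names are hypothetical). Each caller's bucket holds up to `capacity` tokens and refills at a steady rate, so a client can burst briefly but cannot exceed the refill rate over time. The `cost` argument lets you charge 1 per request or an estimated LLM token count.

```typescript
class TokenBucket {
  private tokens: number;
  private lastRefillMs: number;
  private readonly capacity: number;
  private readonly refillPerSecond: number;

  constructor(capacity: number, refillPerSecond: number, nowMs: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.tokens = capacity; // start full so an initial burst is allowed
    this.lastRefillMs = nowMs;
  }

  // Add tokens for the time elapsed since the last refill, capped at capacity.
  private refill(nowMs: number): void {
    const elapsedSeconds = Math.max(0, nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.refillPerSecond);
    this.lastRefillMs = nowMs;
  }

  // Deducts `cost` and returns true if it fits; otherwise leaves the balance unchanged.
  tryConsume(cost: number, nowMs: number): boolean {
    this.refill(nowMs);
    if (this.tokens >= cost) {
      this.tokens -= cost;
      return true;
    }
    return false;
  }

  available(nowMs: number): number {
    this.refill(nowMs);
    return this.tokens;
  }
}

// One bucket per caller key (API key, JWT subject, or org ID).
class TokenBucketLimiter {
  private readonly buckets = new Map<string, TokenBucket>();
  private readonly capacity: number;
  private readonly refillPerSecond: number;

  constructor(capacity: number, refillPerSecond: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
  }

  allow(callerId: string, cost: number, nowMs: number = Date.now()): boolean {
    let bucket = this.buckets.get(callerId);
    if (!bucket) {
      bucket = new TokenBucket(this.capacity, this.refillPerSecond, nowMs);
      this.buckets.set(callerId, bucket);
    }
    return bucket.tryConsume(cost, nowMs);
  }
}
```

Across several server instances, the bucket state would need to live in shared storage such as Redis and be updated atomically, for example in a Lua script.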

⏱ 3. Handling Long-Running AI Tasks with Queues & Webhooks

Sometimes AI tasks are:

- Too long for a real-time HTTP request (e.g., 30–60s)
- Expensive, so you want to defer or batch
- Better suited for event-based triggers (e.g., Slack bot replies, email replies, report generation)

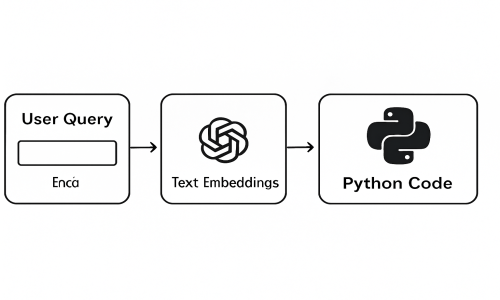

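📦 Architecture Overview

The API route validates the request, enqueues a job, and responds immediately with a job ID. A worker process picks up the job, calls the LLM, and stores the result; the client is then notified through a webhook, socket event, or other channel instead of waiting on an open HTTP connection.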

🧰 Tools You Can Use

| Task | Tools |
|---|---|
| Queue | BullMQ, RabbitMQ, Resque, AWS SQS |
| Worker | Node.js, Python, Go |
| Webhook/Event | Webhook (REST), Socket.IO, Pub/Sub |
| Notification | Email, Slack, In-app alert |

🔧 Example: Node.js + BullMQ
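A full BullMQ setup needs a running Redis instance, so here is a dependency-free sketch of the same flow: enqueue and return a job ID, process with retries, then notify through a webhook. `InMemoryJobQueue`, `WorkerDeps`, and the payload shape are hypothetical. In BullMQ, `enqueue` corresponds to `queue.add()` (whose `attempts` and `backoff` job options cover retries), and `processNext` to the processor function passed to `new Worker(queueName, processor, { connection })`.

```typescript
type JobStatus = "queued" | "running" | "completed" | "failed";

interface AiJob {
  id: string;
  prompt: string;
  webhookUrl: string; // where to deliver the result (hypothetical field)
  status: JobStatus;
  attempts: number;
  result?: string;
  error?: string;
}

// Injected side effects, so the sketch runs without Redis or network access.
interface WorkerDeps {
  callModel(prompt: string): Promise<string>; // the slow LLM call
  notify(url: string, payload: object): Promise<void>; // e.g. an HTTP POST to the webhook
}

class InMemoryJobQueue {
  private readonly jobs = new Map<string, AiJob>();
  private readonly pending: string[] = [];
  private nextId = 1;

  // Called from the API route: record the job and return its ID immediately
  // (e.g. with HTTP 202 Accepted) instead of holding the request open.
  enqueue(prompt: string, webhookUrl: string): string {
    const id = `job-${this.nextId++}`;
    this.jobs.set(id, { id, prompt, webhookUrl, status: "queued", attempts: 0 });
    this.pending.push(id);
    return id;
  }

  // Lets a status endpoint or the client poll for progress.
  get(id: string): AiJob | undefined {
    return this.jobs.get(id);
  }

  // One iteration of the worker loop. Returns false when there was nothing to do.
  async processNext(deps: WorkerDeps, maxAttempts = 3): Promise<boolean> {
    const id = this.pending.shift();
    if (id === undefined) return false;
    const job = this.jobs.get(id)!;
    job.status = "running";
    job.attempts += 1;
    try {
      job.result = await deps.callModel(job.prompt);
      job.status = "completed";
    } catch (err) {
      if (job.attempts < maxAttempts) {
        // Re-queue for another try; a real queue would also delay it (backoff).
        job.status = "queued";
        this.pending.push(id);
        return true;
      }
      job.status = "failed";
      job.error = err instanceof Error ? err.message : String(err);
    }
    // Notify only on a final outcome, success or failure.
    await deps.notify(job.webhookUrl, {
      jobId: job.id,
      status: job.status,
      result: job.result,
      error: job.error,
    });
    return true;
  }
}
```

In a real deployment the worker runs as a separate process that loops on `processNext` (or, with BullMQ, constructs a `Worker`), so slow LLM calls never tie up web server requests.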

📊 Bonus: Monitoring & Cost Control

When using AI in production, costs scale fast. Keep control using:

- ✅ Per-user quotas
- ✅ Token tracking (log tokens per request)
- ✅ Usage dashboards
- ✅ Batch low-priority tasks overnight
- ✅ Use lower-cost models (e.g., GPT-3.5-turbo for drafts)
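Token tracking and per-user quotas reduce to simple arithmetic: a call's cost is prompt tokens × input price + completion tokens × output price. The sketch below assumes prices expressed per 1,000 tokens and uses made-up numbers in the example; `DailyTokenQuota` is a hypothetical in-memory helper that would be backed by Redis or a database in production.

```typescript
// Hypothetical per-model prices in USD per 1,000 tokens. Real prices vary by
// provider and model and change over time; load them from config.
interface ModelPrice {
  inputPer1k: number;
  outputPer1k: number;
}

// Cost of one call from the token counts the API reports.
function estimateCostUsd(promptTokens: number, completionTokens: number, price: ModelPrice): number {
  return (promptTokens / 1000) * price.inputPer1k + (completionTokens / 1000) * price.outputPer1k;
}

// Per-user daily token quota, with one counter per user per UTC day.
class DailyTokenQuota {
  private readonly used = new Map<string, number>();
  private readonly dailyLimit: number;

  constructor(dailyLimit: number) {
    this.dailyLimit = dailyLimit;
  }

  private key(userId: string, now: Date): string {
    return `${userId}:${now.toISOString().slice(0, 10)}`; // e.g. "u1:2024-05-01"
  }

  // Check before calling the model, using an estimate of the tokens it may consume.
  canSpend(userId: string, estimatedTokens: number, now: Date = new Date()): boolean {
    return (this.used.get(this.key(userId, now)) ?? 0) + estimatedTokens <= this.dailyLimit;
  }

  // Record the actual usage reported by the API after the call; returns the day's total.
  record(userId: string, tokens: number, now: Date = new Date()): number {
    const k = this.key(userId, now);
    const total = (this.used.get(k) ?? 0) + tokens;
    this.used.set(k, total);
    return total;
  }
}
```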

🔐 Key Takeaways

| Layer | What to Do |
|---|---|
| Security | Store keys securely, only server-side usage |
| Proxy | Add rate limits, track usage, prevent abuse |
| Queue | Use background jobs for long tasks |
| Alerts | Notify users via webhook or push |
| Logs | Monitor token cost, failures, usage |

💡 Real-World Use Cases

| Use Case | Model and Integration Pattern |
|---|---|
| AI Chat Support | GPT-4 via rate-limited endpoint |
| AI Email Summarizer | Queue + Claude 3 Haiku |
| Automated Code Review | Claude via GitHub Webhooks |
| Report Generation | GPT-4 Turbo in background job |
| Slack AI Bot | Claude or GPT via event-driven webhooks |

🏁 Conclusion

Adding AI to your production backend is more than just an API call — it's an engineering problem that spans:

- Security 🔐
- Scalability 📈
- Reliability ⚙️
- Cost-efficiency 💸

With the right architecture — rate-limited APIs, task queues, and secure environments — you can build stable, powerful AI-enhanced systems for real users.